Reading Handwritten Pages With Azure and Optical Character Recognition

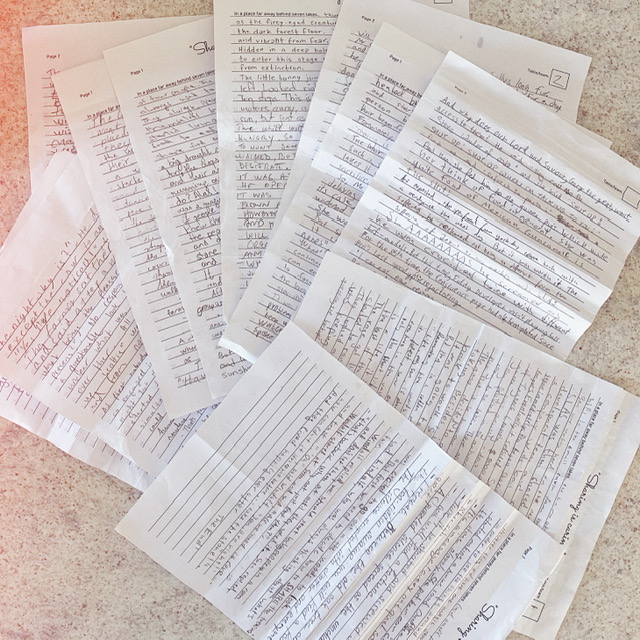

As you might have noticed from previous posts, I absolutely love Azure Cognitive Services and use them a lot. Last week we had a work offsite, and my team planned a dinner activity for everybody. Divided into 7 groups, people had to write a story together: the first person writes a short paragraph, folds the paper down so that only the last sentence is visible, and passes it to the next person. What we had not thought about was the amount of work required to read 14 pages of drunken handwriting from 45 different people!

However, I still had the samples from my last Azure Cognitive Services session for a user group (you can find the Azure Cognitive Services Samples on GitHub), and I used them to digitize the pages. It worked surprisingly well; we only had to tidy up a tiny bit. Once I had the text in digital form, I created some audio versions and even had fun translating the stories into different languages (using the same services and my samples), as we are a multicultural company.

What are Azure Cognitive Services?

Azure Cognitive Services is a set of machine learning algorithms that can add cognitive features to applications. These features include, but are not limited to, text and image recognition, natural language processing, sentiment analysis, and speech recognition. The algorithms are exposed through APIs, which makes it easy for developers to integrate them into their own applications. Some of the most popular Azure Cognitive Services APIs are the Text Analytics API for analyzing text, the Computer Vision API for recognizing and classifying images, and the Language Understanding Intelligent Service (LUIS) for understanding natural language input. Together they can be used to build a wide range of applications that understand and interact with humans. Expect more posts from me on the topic, but for now, here is the tiny code snippet that saved us hours of work!

Code sample:

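    using System;
    using System.IO;
    using System.Threading.Tasks;
    using Microsoft.Azure.CognitiveServices.Vision.ComputerVision;
    using Microsoft.Azure.CognitiveServices.Vision.ComputerVision.Models;

    public async Task ReadFromLocalImage(string endpoint, string key, string localFile) {
        var client = new ComputerVisionClient(new ApiKeyServiceClientCredentials(key)) { Endpoint = endpoint };

        // Submit the image; the response headers carry the URL of the asynchronous read operation.
        using var imageStream = File.OpenRead(localFile);
        var textHeaders = await client.ReadInStreamAsync(imageStream);
        string operationLocation = textHeaders.OperationLocation;

        // The operation ID is the GUID (36 characters) at the end of that URL.
        const int numberOfCharsInOperationId = 36;
        string operationId = operationLocation.Substring(operationLocation.Length - numberOfCharsInOperationId);

        // Poll until the operation has finished, pausing between requests without blocking the thread.
        ReadOperationResult results;
        do {
            await Task.Delay(1000);
            results = await client.GetReadResultAsync(Guid.Parse(operationId));
        }
        while (results.Status == OperationStatusCodes.Running ||
               results.Status == OperationStatusCodes.NotStarted);

        // Print every recognized line, page by page.
        var textInImageResult = results.AnalyzeResult.ReadResults;
        foreach (ReadResult page in textInImageResult) {
            foreach (Line line in page.Lines) {
                Console.WriteLine(line.Text);
            }
        }
    }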
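
The sample above gets the operation ID by cutting the last 36 characters off the operation location. As a small, hedged alternative, the ID could also be read from the last path segment of the URL, which would still work if the URL ever carried a trailing slash or a query string. The sketch below is not part of my samples: the class name `OperationLocationDemo`, the helper `ExtractOperationId`, and the URL are made up for illustration, and it only uses `System.Uri` and `System.Guid` from the base class library.

```csharp
using System;

public static class OperationLocationDemo
{
    // Hypothetical helper (not part of the Computer Vision SDK):
    // returns the GUID found in the last path segment of an operation location URL.
    public static Guid ExtractOperationId(string operationLocation)
    {
        var uri = new Uri(operationLocation);

        // Segments covers only the path, so a query string is ignored;
        // TrimEnd removes a trailing slash if the URL ends with one.
        string lastSegment = uri.Segments[^1].TrimEnd('/');

        // Guid.Parse throws a FormatException if the segment is not a valid GUID.
        return Guid.Parse(lastSegment);
    }

    public static void Main()
    {
        // Made-up operation location, for illustration only.
        string location =
            "https://example.cognitiveservices.azure.com/vision/v3.2/read/analyzeResults/49a36324-fc4b-4387-aa06-090cfbf0064f";

        Console.WriteLine(ExtractOperationId(location));
    }
}
```

The returned `Guid` can be passed to `GetReadResultAsync` directly, so the separate `Guid.Parse(operationId)` call is no longer needed.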

Last modified on 2022-05-10